iPerf is a versatile and powerful tool that has become essential for network administrators and IT professionals alike. Designed to measure the performance and throughput of a network, iPerf provides valuable insights into network bandwidth capacity, latency, and packet loss. By simulating real-world traffic conditions, iPerf enables users to assess network performance, identify bottlenecks, and optimize their infrastructure. In this article, we explore how iPerf works and delve into a practical use case to understand its effectiveness in network diagnostics and optimization.

What is iPerf?

iPerf is a simple, free, cross-platform, and commonly used tool for network performance measurement and testing. It supports several protocols (TCP, UDP, and SCTP, over IPv4 and IPv6) and many parameters. iPerf is available for multiple operating systems, such as Linux and Windows. Network administrators and engineers use it to diagnose network issues, optimize performance, and conduct network experiments. iPerf is also used in performance testing and benchmarking of network devices.

Moreover, iPerf can be used for simple network stress testing. For this purpose users should choose the UDP protocol, because TCP automatically limits its sending rate to adapt to the available bandwidth. To do it correctly, the user must request a bandwidth far above what the connection can handle. For example, to stress a 10 Mbps connection, the user should send about 100 Mbps of traffic (using the -b parameter).
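As a rough sketch of the rule of thumb above, the helper below builds a UDP client command whose -b target is a multiple of the nominal link speed. The function name, the default factor of 10, the 30-second duration, and the server address are illustrative assumptions; only the -c, -u, -b, and -t options come from iPerf itself.

```python
def build_udp_stress_command(server, link_mbps, factor=10, duration_s=30):
    """Return an iperf client command that offers far more UDP traffic than the link can carry."""
    if link_mbps <= 0 or factor <= 1:
        raise ValueError("link_mbps must be positive and factor greater than 1")
    target_mbps = int(link_mbps * factor)
    return [
        "iperf",
        "-c", server,              # client mode, connect to this server
        "-u",                      # UDP, so no TCP rate adaptation
        "-b", f"{target_mbps}M",   # offered load in Mbit/s
        "-t", str(duration_s),     # test duration in seconds
    ]


if __name__ == "__main__":
    # Hypothetical server address; prints the command instead of running it.
    print(" ".join(build_udp_stress_command("192.168.10.12", link_mbps=10)))
```

The returned list can be passed directly to subprocess.run once an iPerf server is listening on the target host.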

iPerf usage

It is possible to automate network bandwidth measurements using iPerf. In my personal opinion, Python is one of the best choices for this purpose. If we want to use iPerf2, we can use pyperf2 (keep in mind that it is only at version 0.2) or build a script which runs iPerf from the command line and collects the output (in this case the -y parameter, which reports results as comma-separated values, could be useful).
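A minimal sketch of the second approach could look like the following. It runs an iPerf2 client with -y C and parses the CSV lines. The function names are hypothetical, and the column order in CSV_FIELDS is an assumption based on commonly seen iPerf2 TCP reports, so it should be verified against the iPerf2 version in use.

```python
import csv
import io
import subprocess

# Assumed column order of an iPerf2 "-y C" TCP report line; verify for your version.
CSV_FIELDS = [
    "timestamp", "source_ip", "source_port", "dest_ip", "dest_port",
    "transfer_id", "interval", "transferred_bytes", "bits_per_second",
]


def parse_iperf2_csv(output):
    """Parse iPerf2 CSV report lines into dictionaries with numeric throughput fields."""
    reports = []
    for row in csv.reader(io.StringIO(output)):
        if len(row) < len(CSV_FIELDS):
            continue  # skip blank or unexpected lines
        report = dict(zip(CSV_FIELDS, row))  # extra (e.g. UDP) columns are ignored
        try:
            report["transferred_bytes"] = int(report["transferred_bytes"])
            report["bits_per_second"] = int(report["bits_per_second"])
        except ValueError:
            continue  # not a numeric report line
        reports.append(report)
    return reports


def run_iperf2_client(server, port=5001, duration_s=10):
    """Run an iPerf2 client with CSV reporting and return the parsed reports."""
    cmd = ["iperf", "-c", server, "-p", str(port), "-t", str(duration_s), "-y", "C"]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_iperf2_csv(result.stdout)
```

Keeping the parser separate from the subprocess call makes it possible to test the parsing logic on saved output without a running iPerf server.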

For iPerf3, a Python module is also available. It is still in its initial version but can be useful for simple measurements. If you want to explore this topic, please check this post about automating bandwidth testing.
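Even without a dedicated module, iPerf3 results are easy to process because of its JSON output (-J). The sketch below pulls the sender and receiver throughput out of a TCP result. The function name is hypothetical, and the key names (end, sum_sent, sum_received, bits_per_second, error) are what I would expect from iperf3 -J for a TCP test; treat them as an assumption and check them against real output.

```python
import json


def summarize_iperf3_json(raw_json):
    """Extract sender and receiver throughput in Mbit/s from an iperf3 -J TCP result."""
    data = json.loads(raw_json)
    if "error" in data:
        raise RuntimeError(f"iperf3 reported an error: {data['error']}")
    end = data["end"]
    return {
        "sent_mbps": end["sum_sent"]["bits_per_second"] / 1e6,
        "received_mbps": end["sum_received"]["bits_per_second"] / 1e6,
    }
```

The raw JSON would typically come from running something like iperf3 -c SERVER -J and capturing its standard output.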

iPerf can be used to saturate high-speed links, but we must bear in mind that it needs a lot of resources, such as CPU and memory. Theoretically, iPerf could be used to test links up to 100 Gbps, but at such speeds it is better to use professional, dedicated traffic generators. Moreover, achieving such high network speeds often requires specialized hardware and network configurations, such as link aggregation (LAG).

iPerf - the most interesting options

| Option | Description |
|---|---|
| -i, --interval | Sets the interval time in seconds between periodic bandwidth, jitter, and loss reports. If non-zero, a report is made every interval of the bandwidth since the last report. If zero, no periodic reports are printed. Default is zero. |
| -l, --len | The length of buffers to read or write. |
| -u, --udp | Use UDP rather than TCP. |
| -B, --bind | Bind to host, one of this machine's addresses. For the client, this sets the outbound interface. For a server, this sets the incoming interface. |
| -d, --dualtest | ONLY iPerf2! Run iPerf in dual testing mode. This will cause the server to connect back to the client on the port specified in the -L option (or defaults to the port the client connected to the server on). This is done immediately, therefore running the tests simultaneously. If you want an alternating test, try -r. |
| -P, --parallel | The number of simultaneous connections to make to the server. Default is 1. |
| -t, --time | The time in seconds to transmit for. iPerf normally works by repeatedly sending an array of len bytes for time seconds. Default is 10 seconds. |
| -f, --format | A letter specifying the format to print bandwidth numbers in. Supported formats are different for iPerf2 and iPerf3. |
| -J, --json | ONLY for iPerf3. Output in JSON format. |
| -y | ONLY for iPerf2. Report with comma-separated values. |

Differences between iPerf2 and iPerf3

iPerf is available in two versions: iPerf2 and iPerf3. Users have to keep this in mind and choose the correct version. iPerf3 was introduced in 2014. It is a rewrite from scratch and is not backwards compatible with iPerf2. The goal was to create a smaller and simpler code base. Currently iPerf2 and iPerf3 are maintained in parallel by two different teams. Here are some of the main differences between the two versions:

| iPerf2 | iPerf3 |
|---|---|
| Multi-threaded | Single-threaded |
| Supports TCP and UDP protocols | Supports TCP, UDP, and SCTP protocols |
| CSV format output (-y parameter) | Supports JSON output format (-J parameter) |
| -d parameter: Supports a bidirectional test which performs tests from both the client and server | No -d dual-test mode |

It is hard to say which version is better; the user has to choose based on their needs and the features they require. One thing is for sure: if you want to test parallel stream performance, you should use a multi-threaded tool, and in this case iPerf2 will be the better choice.

Pros and cons of iPerf

As I mentioned, iPerf is widely used by students as well as engineers, but like any tool it has pros and cons. Below I have listed some of them:

iPerf pros

- Supports most popular protocols like TCP, UDP and SCTP. It can be used to test e.g. bandwidth, latency or packet loss.

- iPerf is cross-platform - it can be used on Linux, Windows, macOS, Android, x86, ARM, and various network devices.

- iPerf has a simple command-line interface that allows users to quickly start testing their network.

- It is free - anyone can download iPerf and use it to test their own topology.

- It is open-source - anyone can clone and optimize it.

- iPerf is pre-installed on selected systems and devices, for example on TrueNAS.

iPerf cons

- Poor community support.

- Existing bugs are not addressed and fixed.

- We have to monitor resources to ensure that reported values are correct and that no throttling occurs.

- Resources should be manually assigned to prevent concurrency between iPerf and other tasks performed by the OS.

- iPerf has no option to generate reports. Users have to rely on additional tools for this - currently there are no existing tools worth recommending, so the best way is to build the reporting yourself.

- Using iPerf requires basic networking knowledge from the user which could be challenging for some.

- iPerf does not provide many configuration options to set up packets and to control how packets should be sent.

- iPerf3 is not backward compatible with iPerf2.

- iPerf needs a lot of resources when generating high volumes of traffic, so CPU and memory will be heavily loaded. CPU isolation is a good way to keep iPerf from sharing CPU cores with other tasks on the server, but we still have to monitor resources and tune the operating system to get maximum performance - e.g. set the CPU frequency governor to performance mode.
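The resource-related points above can be partly automated on Linux. The following sketch, under stated assumptions, pins an iPerf command to chosen cores with taskset and lists the CPUs whose frequency governor is not set to performance. The function names are hypothetical, and the sysfs path is the usual Linux cpufreq location, which may be absent on some systems (for example inside virtual machines).

```python
from pathlib import Path


def pin_to_cpus(command, cpus):
    """Prefix a command with taskset so it runs only on the given CPU cores."""
    cpu_list = ",".join(str(cpu) for cpu in cpus)
    return ["taskset", "-c", cpu_list, *command]


def non_performance_cpus(sysfs_root="/sys/devices/system/cpu"):
    """Return a mapping of CPU name to governor for CPUs not in 'performance' mode."""
    offenders = {}
    pattern = "cpu[0-9]*/cpufreq/scaling_governor"
    for gov_file in sorted(Path(sysfs_root).glob(pattern)):
        governor = gov_file.read_text().strip()
        if governor != "performance":
            # gov_file is .../cpuN/cpufreq/scaling_governor, so go two levels up
            offenders[gov_file.parent.parent.name] = governor
    return offenders


if __name__ == "__main__":
    # Illustrative only: prints the pinned command and any CPUs worth checking.
    print(" ".join(pin_to_cpus(["iperf", "-s"], cpus=[2, 3])))
    print(non_performance_cpus())
```

An empty result from non_performance_cpus means either that every CPU already uses the performance governor or that the system exposes no cpufreq information at all.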

iPerf use case

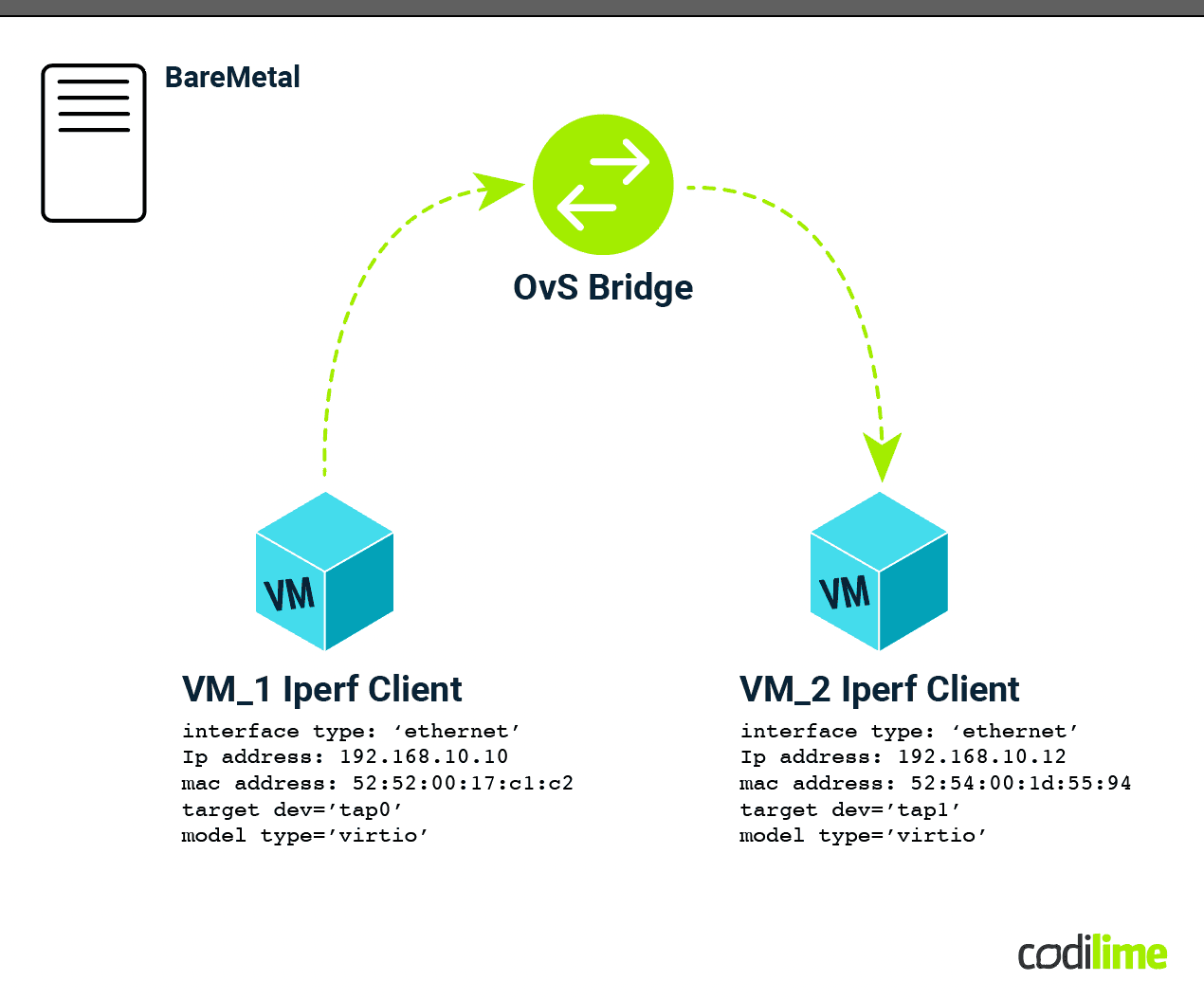

Let's check out how to use iPerf by creating a simple topology with two virtual machines connected via an OvS bridge (virtual switch) to simulate a switch performance test. In this use case we are going to look at simple outputs for TCP and UDP traffic without any special configuration, then compare and explain them.

I will use the following configuration:

- OvS (Open vSwitch) will be used to simulate and check a production-ready software switch. If you want to know more about OvS, please check the documentation.

- iPerf2, because it is the most commonly used and most versatile version, but both iPerf2 and iPerf3 can be used here.

- Two virtual machines with CentOS7 OS and the following interfaces:

  - VM_1 - eth1:
    - interface type: 'ethernet'
    - IP address: 192.168.10.10
    - MAC address: 52:54:00:17:c1:2c
    - target dev='tap0'
    - model type='virtio'

  - VM_2 - eth1:
    - interface type: 'ethernet'
    - IP address: 192.168.10.12
    - MAC address: 52:54:00:d1:55:94
    - target dev='tap1'
    - model type='virtio'

We have two virtual machines with nine virtual cores, CentOS operating system, and have installed the iPerf 2.1.7 tool.

We can check the IP addresses of interfaces connected to the OvS:

[root@vm_1 ~]# ip a

…

…

…

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:17:c1:2c brd ff:ff:ff:ff:ff:ff

inet 192.168.10.10/24 scope global eth1

valid_lft forever preferred_lft forever

[root@vm_2 ~]# ip a

…

…

…

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:d1:55:94 brd ff:ff:ff:ff:ff:ff

inet 192.168.10.12/24 scope global eth1

valid_lft forever preferred_lft forever

OvS is simply configured to switch packets from one port to another.

# CREATE TAP INTERFACES

ip tuntap add tap0 mode tap

ip link set dev tap0 up

ip tuntap add tap1 mode tap

ip link set dev tap1 up

# DELETE OVS BRIDGE IF EXISTS

ovs-vsctl --if-exists del-br br0

# CREATE OVS BRIDGE AND ADD PORTS

ovs-vsctl add-br br0

ovs-vsctl add-port br0 tap0

ovs-vsctl add-port br0 tap1

# CREATE OVS FLOWS - PACKETS FROM TAP_0 SHOULD BE SENT VIA TAP_1 AND VICE VERSA

ovs-ofctl del-flows br0

ovs-ofctl add-flow br0 in_port=tap0,actions=output:tap1

ovs-ofctl add-flow br0 in_port=tap1,actions=output:tap0

Let’s run the iPerf client on VM_1 and the iPerf server on VM_2 using the following commands:

For TCP traffic:

[root@vm_2 ~]# iperf -s -p 45678 -t 32 -y C -P 1

[root@vm_1 ~]# iperf -p 45678 -c 192.168.10.12 -t 30 -y C -P 1

For UDP traffic:

[root@vm_2 ~]# iperf -s -p 45678 -t 32 -y C -P 1 -u

[root@vm_1 ~]# iperf -p 45678 -c 192.168.10.12 -t 30 -y C -P 1 -u

Results of iPerf use case

Running the same tests without the -y C parameter gives the following human-readable output:

[root@vm_2 ~]# iperf -s -p 45678 -t 32 -P 1

------------------------------------------------------------

Server listening on TCP port 45678

TCP window size: 85.3 KByte (default)

------------------------------------------------------------

[ 1] local 192.168.10.12 port 45678 connected with 192.168.10.10 port 35786 (icwnd/mss/irtt=14/1448/0)

[ ID] Interval Transfer Bandwidth

[ 1] 0.00-30.00 sec 149 GBytes 42.7 Gbits/sec

############################################################################################

[root@vm_1 ~]# iperf -p 45678 -c 192.168.10.12 -t 30 -P 1

------------------------------------------------------------

Client connecting to 192.168.10.12, TCP port 45678

TCP window size: 85.0 KByte (default)

------------------------------------------------------------

[ 1] local 192.168.10.10 port 35786 connected with 192.168.10.12 port 45678 (icwnd/mss/irtt=14/1448/251)

[ ID] Interval Transfer Bandwidth

[ 1] 0.00-30.02 sec 149 GBytes 42.7 Gbits/sec

[root@vm_2 ~]# iperf -s -p 45678 -t 32 -P 1 -u

------------------------------------------------------------

Server listening on UDP port 45678

UDP buffer size: 208 KByte (default)

------------------------------------------------------------

[ 1] local 192.168.10.12 port 45678 connected with 192.168.10.10 port 55599

[ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams

[ 1] 0.00-30.01 sec 3.75 MBytes 1.05 Mbits/sec 0.001 ms 0/2678 (0%)

############################################################################################

[root@vm_1 ~]# iperf -p 45678 -c 192.168.10.12 -t 30 -P 1 -u

------------------------------------------------------------

Client connecting to 192.168.10.12, UDP port 45678

Sending 1470 byte datagrams, IPG target: 11215.21 us (kalman adjust)

UDP buffer size: 208 KByte (default)

------------------------------------------------------------

[ 1] local 192.168.10.10 port 55599 connected with 192.168.10.12 port 45678

[ ID] Interval Transfer Bandwidth

[ 1] 0.00-30.01 sec 3.75 MBytes 1.05 Mbits/sec

[ 1] Sent 2679 datagrams

[ 1] Server Report:

[ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams

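[ 1] 0.00-30.01 sec 3.75 MBytes 1.05 Mbits/sec 0.000 ms 0/2678 (0%)

The TCP test reports far higher throughput than the UDP test. Keep in mind that the UDP commands above do not set a target bandwidth with -b, so the client paces its traffic at iPerf's low default UDP rate (about 1 Mbit/s), and the 1.05 Mbits/sec result reflects that target rather than the limit of the switch. Even when a higher target is set, TCP often comes out ahead in this kind of test, for several reasons: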

- TCP is offloaded in the kernel (way faster than userspace)

- TCP is often accelerated in HW

- UDP implementation of iPerf is not ideal
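The 1.05 Mbits/sec UDP figure can be cross-checked against the client's own pacing information: the output reports an IPG (inter-packet gap) target of 11215.21 us for 1470-byte datagrams. The sketch below converts that gap into an offered rate; the function name is illustrative. The result is about 1.05 Mbit/s, which is consistent with the sender holding itself to a target rate (as far as I know, iPerf's default when -b is not given) and not with the switch being the limiting factor.

```python
def udp_rate_from_ipg(datagram_bytes, ipg_us):
    """Return the offered UDP payload rate in bits per second for a given inter-packet gap."""
    if ipg_us <= 0:
        raise ValueError("ipg_us must be positive")
    return datagram_bytes * 8 / (ipg_us * 1e-6)


if __name__ == "__main__":
    # Values taken from the iPerf client output above.
    rate = udp_rate_from_ipg(datagram_bytes=1470, ipg_us=11215.21)
    print(f"{rate / 1e6:.2f} Mbit/s")
```

To compare UDP and TCP throughput fairly, the UDP test would need to be repeated with an explicit, much higher -b value.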

This simple use case does not exhaust what iPerf can do. It can be used in many more complicated scenarios, for example to measure packet loss or to check how long an active-backup switchover takes. For the latter, the user can start iPerf with a 1000 PPS UDP stream and set the reporting interval to 1 second. At 1000 PPS each lost packet corresponds to roughly 1 ms of outage, so after a failure it is easy to estimate its duration, e.g. 100 lost packets = 100 ms.
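The arithmetic behind this estimate is simple enough to capture in two small helpers. This is only a sketch: the function names are hypothetical, and the default datagram size of 1470 bytes is taken from the iPerf output shown earlier.

```python
def outage_ms(lost_packets, packets_per_second):
    """Estimate outage duration in milliseconds from the number of lost packets."""
    if packets_per_second <= 0:
        raise ValueError("packets_per_second must be positive")
    return lost_packets * 1000.0 / packets_per_second


def bandwidth_for_pps(packets_per_second, datagram_bytes=1470):
    """Return the UDP target bandwidth in bits per second that yields the given packet rate."""
    return packets_per_second * datagram_bytes * 8


if __name__ == "__main__":
    print(outage_ms(lost_packets=100, packets_per_second=1000))  # 100.0
    print(bandwidth_for_pps(1000))  # 11760000
```

With 1470-byte datagrams, a 1000 PPS stream therefore corresponds to a -b target of roughly 11.76 Mbit/s.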

Comparison to other traffic generators

There are a few options on the market to consider:

- Professional hardware traffic generators

With this option we are able to buy a dedicated autonomous and tested device with dedicated software which is responsible for generating specified traffic. These devices are very precise and are a good but also very expensive choice. What is more, the user has to also pay for support and technical training for the people who will be responsible for use and setup. On the other hand HW traffic generators guarantee reliability and provide a lot of additional features like, for example, manipulating network traffic by modifying packet headers, payload, and other attributes, or analyzing network traffic in real-time.

- Software traffic generators

There are multiple paid and free solutions to consider (paid: IXIA, Solarwinds WAN Killer; free: TRex, Ostinato). Of course in this case we need a dedicated server to install such a traffic generator, as for iPerf. One of the free and open-source choices could be TRex created by Cisco. In my opinion it is much better than iPerf as a traffic generator, because:

- It has a dedicated Python API, which is great for automation.

- The user is able to create different streams with millions of different flows.

- It allows you to collect more statistics.

- The community and discussion groups work efficiently.

- It is still developed and any bugs are removed on an ongoing basis.

- It is based on DPDK, and because of that TRex is able to achieve PPS (packet per second) values unachievable for iPerf.

- Some NICs have hardware acceleration and because of that the user is able to achieve much greater effectiveness.

More information about the TRex traffic generator can be found in our article about a traffic generator for measuring network performance.

- Pktgen

This is an open-source software tool that was originally created by the Intel Corporation as part of their DPDK (Data Plane Development Kit) project. DPDK is a set of libraries and drivers for fast packet processing on Intel processors, and Pktgen is one of the tools in the DPDK suite that helps with packet generation and testing. It is capable of generating 10 Gbit wire-rate traffic with 64-byte frames and can handle packets with UDP, TCP, ARP, ICMP, GRE, MPLS and Queue-in-Queue. The project is open-source and community-driven, with contributions and support from various developers and organizations in the networking industry. More information about this tool can be found in the official documentation.

To sum up, iPerf should be considered as a good choice if you want to simply test network throughput without specialized knowledge about networking and programming. It can be easily installed on various devices and users are able to quickly check the status of the network. On the other hand, it has some disadvantages, as mentioned in this article, so if you are looking for a professional traffic generator you should consider either a more expensive, dedicated HW device or advanced software traffic generator; either of which will require some development work to set up and run properly.

Conclusion

In summary, iPerf is a versatile and powerful tool for testing network performance. It can simulate various network conditions, supports multiple protocols, and offers a useful set of command-line options. A huge advantage is that iPerf is free and open-source, but we have to remember that it also has some weaknesses. Among others, it requires a lot of resources, has poor community support, has many unaddressed issues, and requires some CLI and networking skills to use properly. It is a good tool, for example, for smoke or sanity network tests, but if you want a more advanced traffic generator capable of generating more PPS, you should consider highly-developed software traffic generators or HW solutions.